“I don’t think I’m going to be smarter than GPT-5.”

On February 7, 2025, OpenAI CEO Sam Altman visited Technische Universität Berlin for an on-stage conversation, where he made the comment above.

Shortly after Sam’s visit to Berlin, Sherwood News posted an article about an AI test called the ARC-AGI that, until recently, AI models had struggled to solve:

For five years, no AI model could score higher than 5% on the test. That changed when OpenAI announced on December 20, 2024, that its just-released “o3” model had solved the ARC-AGI test. This marked the first time any AI had passed the test — a huge leap over the other state-of-the-art models.

Impressive! And it IS tempting to look at these latest LLM reasoning models and think or say something resembling: “Oh sh*t — computers are about to replace us…!”

But I don’t think this is true.

Nor do I think it’s a good way to think, or talk, about AI.

IMPORTANT SIDE NOTE >>>

If you have any cloudiness about AI (for example, if you are not clear on what an “LLM” is), I highly recommend listening to either this podcast with my friend Jason or this podcast with my friend Josh, where I break down the most important “first things” you need to know about the current realities of this thing we’re calling “AI.”

“Trying to score better than an AI model” might be a fun game to play for a few minutes (I admit I played a couple of rounds of the Sherwood article’s puzzle), but I have a big objection to this “smarter than” framing.

“Will you be smarter than GPT-5?” is actually a nonsensical question.

This framing is reductive and dangerous, because it strongly implies that there is only one kind of intelligence.

It also implies there’s a competition happening right now, and it looks like this:

//

You feel this, right? It’s the way SO many of these conversations are framed.

But this isn’t actually what’s happening at ALL.

You see, “intelligence” is best viewed in relation to its purpose.

Meaning, intelligence is actually more like transportation — and the “best” kind of transportation depends entirely on where you’re trying to go and what you’re trying to do.

Sometimes, you need a bike.

Sometimes you need an airplane.

Sometimes you need a freight train.

What we cannot do is declare that a bike is simply and universally “better” than an airplane. If we want to view it through the lens of competition again, the bike would “win” certain competitions and the airplane would clearly “win” others.

Intelligence is like this, too.

If you want to be a musician, you need musical intelligence.

If you want to be an athlete, you need body intelligence.

If you want to be a nurse, you need emotional intelligence.

If you want to be an architect, you need spatial intelligence.

What the “best” kind of intelligence is depends entirely on where you’re trying to go and what you’re trying to do.

This is why the tech bro framing of “Are you smarter than X model of AI?” is, frankly, stupid.

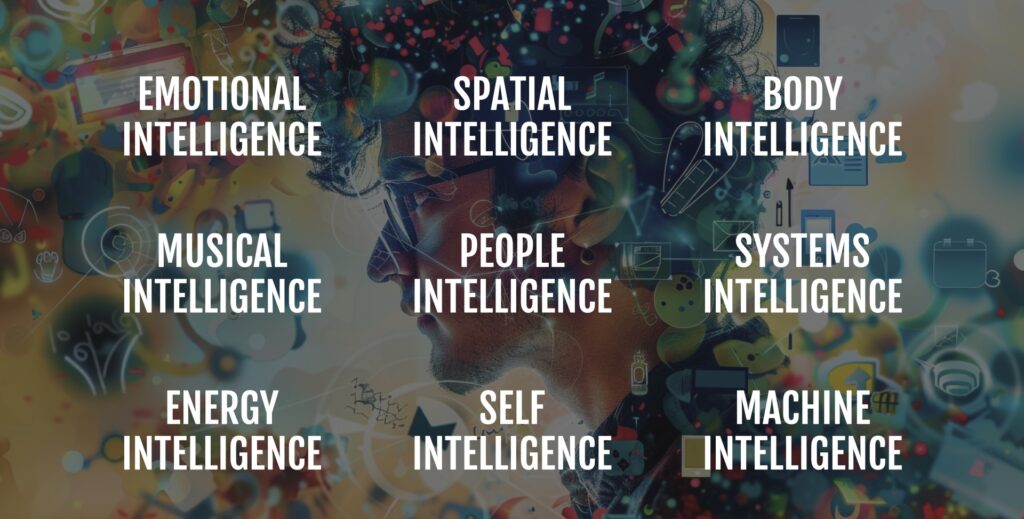

It’s much better for us to see what’s happening through a lens that looks more like this:

//

There are MANY kinds of intelligence, and they are more or less on equal footing, because what kind is “best” depends entirely on where you’re trying to go and what you’re trying to do.

To be clear: there IS a new kind of intelligence emerging.

I’d vote that we call it language-based Machine Intelligence. It’s a much more specific label than the catch-all term “AI.” You can see Machine Intelligence up there in the picture, on par with all the other kinds. And damn, it is REALLY good at certain things.

But it’s bloody useless for doing a bunch of other things.

What do we do about this?

Put Machine Intelligence on your “Personal Board Of Advisors.”

You might not have thought about it quite this way, but whenever we need to figure something out, we go to our Personal Board Of Advisors.

Sometimes, our PBOA is friends or family. Sometimes it’s our business coach or therapist. Sometimes it’s Dr. Google. If you’re in an organization, sometimes it’s an actual Board Of Advisors! Depending on where you’re trying to go and what you’re trying to do, your PBOA might have a very different makeup of participants.

And this is how it should be.

Over the coming decade, our “Machine Intelligence Advisor” is going to get smarter and more capable. This is exciting!

But it’s going to be VERY important to remember that there are MANY other forms of intelligence we need on our PBOA. We ought not to get so enthralled or distracted that we start thinking the Machine Intelligence Advisor can replace all these other things, because it can’t.

As you may remember, I have a history of critiquing humanity’s latest rockstar CEO, but in this particular video, Sam almost gets there. His opening framing is deeply misleading, but he arrives at a point I can absolutely agree with.

“I don’t think I’m going to be smarter than GPT-5. And I don’t feel sad about it because I think it just means that we’ll be able to use it to do incredible things. We want more science to get done. We want to enable researchers to do things they couldn’t do before. If scientists can do things because they have a crazy-high IQ tool… that’s just a win for all of us.”

So, don’t try to “outscore” this “crazy-high IQ” tool — that’s a waste of your time and energy.

Instead, make it part of your team and use it to do something that matters.